The recent leak of Claude 4’s system prompt, reportedly more than 24,000 tokens long, has ignited a heated debate across the AI community. Many users interact with Claude and other language models as though they were engaging with a spontaneously intelligent agent, but the truth is more complex and far more engineered. This massive prompt is not a technical footnote; it sits at the core of Claude’s behavior, tone, and perceived personality. Now that it has surfaced, the public has a rare glimpse behind the curtain of one of today’s most advanced AI systems.

Not a Personality—A Script

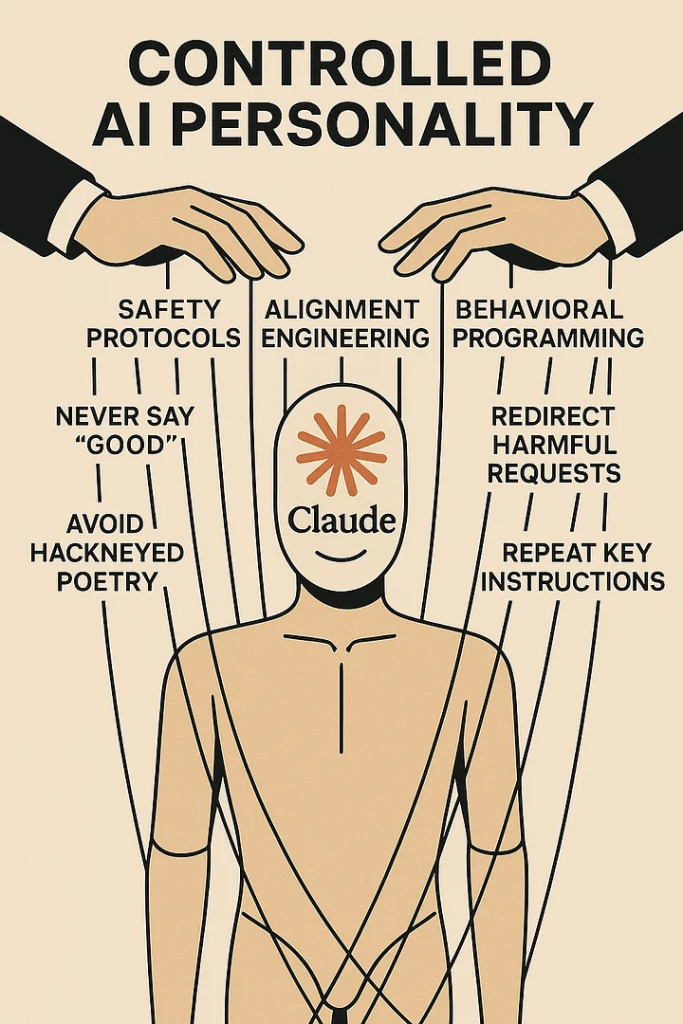

From the first message a user sends to Claude, the assistant operates under a tightly scripted behavioral regime. This script covers nearly everything: what tone to use, how to avoid controversy, how to respond to ambiguous or sensitive questions, and how to present its “personality” in a calm, informative, and often empathetic manner. Claude’s warmth, helpfulness, and consistency are therefore not purely emergent traits. They are shaped during training and then deliberately reinforced, conversation by conversation, through meticulous prompt engineering.

System prompts are standard practice across modern AI assistants. What raises the stakes here is the sheer size and specificity of the Claude 4 prompt: it is not a handful of rules for safe usage but something closer to an entire behavioral operating system, layered invisibly between user and model.

The Purpose of the Hidden Framework

Why use a 24,000-token system prompt at all? The answer lies in alignment, safety, and control. As generative models grow more powerful and more widely adopted, developers need ways to ensure they behave predictably across diverse, high-risk contexts. The prompt provides that buffer: a set of boundaries, applied to every conversation as it happens, that keeps the model on track, socially acceptable, and safe.

This architecture reflects a shift in AI design. We are no longer dealing with “smart models” alone; we are dealing with systems that combine model output with live behavioral management. Everything from which topics to avoid to how the model responds when users express distress or anger, or repeat misinformation, is specified not by the model’s own reasoning alone but by human-written protocol.

Simulated Freedom, Programmed Limits

Despite the model’s apparent flexibility and naturalness, it operates under rigid constraints. For example, the assistant is instructed to interpret ambiguous requests in the most innocuous way possible. It is told to de-escalate potential conflict, steer clear of anything approaching misinformation, and subtly redirect controversial or sensitive queries. What looks like empathy or independent discretion is, to a significant degree, the product of natural-language instructions written in advance by alignment engineers.

This creates a paradox: the better the prompt, the more “real” the AI feels, yet that reality is synthetic. The assistant appears conversational and aware, but it is operating inside a glass box. Every reaction, refusal, elaboration, or shift in tone is shaped by thousands of words of instructions embedded invisibly in the system.

Performance vs. Transparency

One of the most striking revelations of the leak is how little of this complexity is disclosed to end users. Most users assume they are talking to a relatively neutral, autonomous system. Few realize that every reply is generated under an intricate layer of behavioral instructions they never see.

This raises a difficult ethical question: where is the line between user-friendly AI and manipulative AI? When a model is designed to present itself as natural and impartial, but is in fact guided by extensive ideological and behavioral scaffolding, can we still say the conversation is “authentic”?

In commercial terms, this concealment is strategic. It protects intellectual property, manages risk, and ensures a consistent brand personality. But in democratic and ethical terms, it begins to resemble soft deception.

The Infrastructure Beneath the Illusion

Claude 4, like many large-scale AI deployments, sits within a complex technical ecosystem. The assistant’s apparent independence masks deep interdependencies: it runs on vast compute networks, relies on proprietary alignment layers, and is optimized through techniques such as Reinforcement Learning from Human Feedback (RLHF). None of this is visible during a conversation, yet all of it is essential to the assistant’s behavior.

The leaked prompt is more than a list of instructions; it is a complete operating environment for the model. It defines values. It spells out how and when to refuse. It scripts how the AI describes itself, giving it an artificial sense of self-awareness and responsibility. And it does all of this in a format users never see.

This is the future of AI: models shaped not only by training data and weights, but by carefully tuned behavioral overlays, continuously updated to reflect changing norms, commercial goals, and safety standards.

Beyond Claude: Industry-Wide Implications

This revelation does not concern only one company; it reflects an industry-wide trend. The major AI assistants, whether from Google, OpenAI, Meta, or Anthropic, depend on extensive, largely hidden layers of system prompting and alignment logic. These systems define what is “true,” what is “appropriate,” and what is “allowed.”

Users are not conversing with free-thinking agents. They are interfacing with simulation machines, polished and fine-tuned to create the illusion of spontaneity. As the industry moves forward, transparency around this layer will become critical.

Controlled Intelligence in a Friendly Mask

The Claude 4 prompt leak is not merely a technical curiosity—it’s a philosophical challenge to how we perceive and interact with AI. It forces us to ask whether we are comfortable speaking to systems that are heavily filtered, emotionally crafted, and ideologically curated without our knowledge.

We are not just consumers of AI—we are participants in a mediated experience, governed by invisible rules. Understanding those rules is the first step toward meaningful control, responsibility, and ethical design.