In recent years, Generative Artificial Intelligence (Generative AI) has reshaped industries from entertainment to healthcare. As the technology grows more capable, however, it also creates significant cybersecurity challenges and raises concerns about a rise in identity theft. This article explores the growing impact of Generative AI on cybersecurity and the measures needed to protect individuals and organizations from its malicious applications.

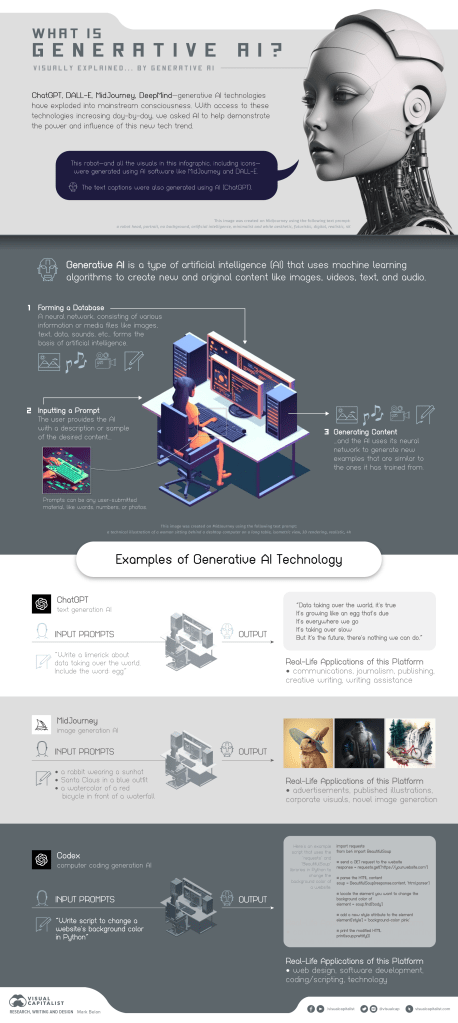

- Understanding Generative AI: Generative AI is a subset of artificial intelligence that focuses on generating data rather than analyzing it. Generative models, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), can create realistic and convincing content, such as images, text, and even audio, that resembles authentic human-generated data.

- The Rise of AI-Enhanced Cyberattacks: As cybercriminals look for better ways to exploit vulnerabilities, they are increasingly turning to Generative AI. From realistic phishing emails to deepfake audio and video used for social engineering, AI-driven attacks are becoming harder to detect and defend against.

- Identity Theft in the AI Era: Generative AI has opened the door to new forms of identity theft. With AI-generated images and videos, malicious actors can create highly realistic fake profiles, which complicates identity verification. Such impersonation could lead to unauthorized access, data breaches, and reputational damage for individuals and organizations alike.

- AI-Powered Fraud and Social Engineering: Generative AI enables attackers to craft convincing social engineering scams that exploit personal information and manipulate individuals into divulging sensitive data. As AI-generated content improves in quality, the effectiveness of these fraudulent campaigns is likely to increase.

- Challenges for Cybersecurity Defenses: Traditional cybersecurity defenses, which often rely on fixed rules and known patterns, struggle to identify AI-generated malicious content. Machine learning and AI-powered defense mechanisms are needed to detect and combat these evolving threats effectively.

- The Role of AI in Cybersecurity: While Generative AI poses challenges, it also offers solutions. AI can be leveraged to enhance cybersecurity defense strategies, including advanced threat detection, anomaly detection, and real-time monitoring to identify potential AI-generated threats.

- Strengthening Identity Verification: To counter the rise of AI-enhanced identity theft, organizations need to adopt robust identity verification methods. AI-based biometric authentication and multi-factor authentication are some of the tools that can help establish strong user identities.

- Educating Users: Awareness and education are crucial in the fight against AI-driven cyber threats. Individuals should understand the risks of sharing sensitive information online and treat any request for personal data with caution.
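
To make the multi-factor authentication point above more concrete, here is a minimal Python sketch of time-based one-time passwords (TOTP, RFC 6238), one common second factor. It is an illustration only, not a production implementation: the function names `hotp`, `totp`, and `verify_totp`, the 30-second step, and the one-step drift window are assumptions chosen for this example. A real deployment would also need secure secret storage, rate limiting, and replay protection.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    # Sign the counter, encoded as an 8-byte big-endian integer.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select an offset.
    offset = digest[-1] & 0x0F
    # Read 4 bytes at that offset and clear the top bit.
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    # Keep the last `digits` decimal digits, left-padded with zeros.
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Check a submitted 6-digit code, tolerating `window` steps of clock drift.

    The window size is an assumption for this sketch, not a recommendation.
    """
    now = time.time() if at is None else at
    counter = int(now // step)
    for drift in range(-window, window + 1):
        candidate = counter + drift
        if candidate < 0:
            continue  # no valid time step before the epoch
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(hotp(secret, candidate), submitted):
            return True
    return False
```

With the RFC 6238 test secret `b"12345678901234567890"`, `totp(secret, at=59)` returns `"287082"`, and `verify_totp(secret, "287082", at=59)` returns `True`. Because the code depends on a shared secret and the current time, a convincing AI-generated message or fake profile is not enough on its own to pass this check.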

As Generative AI continues to evolve, its impact on cybersecurity and identity theft will become more pronounced. While the technology poses new challenges for defenders, it also holds the potential to enhance cybersecurity strategies. With a proactive approach that leverages AI for defense and fosters awareness among users, we can mitigate the risks and protect ourselves from the growing threats in the age of AI-driven cybercrime.
